DSN-2026: Artifacts Call For Contributions

DSN supports open science, where authors of accepted papers are encouraged to make their tools and datasets publicly available to ensure reproducibility and replicability by other researchers. New this year, DSN 2026 will offer a separate artifact evaluation track to all accepted papers from all three categories of the research track. The goals of the artifact track are to (1) increase confidence in a paper’s claims and results, and (2) facilitate future research via publicly available datasets and tools.

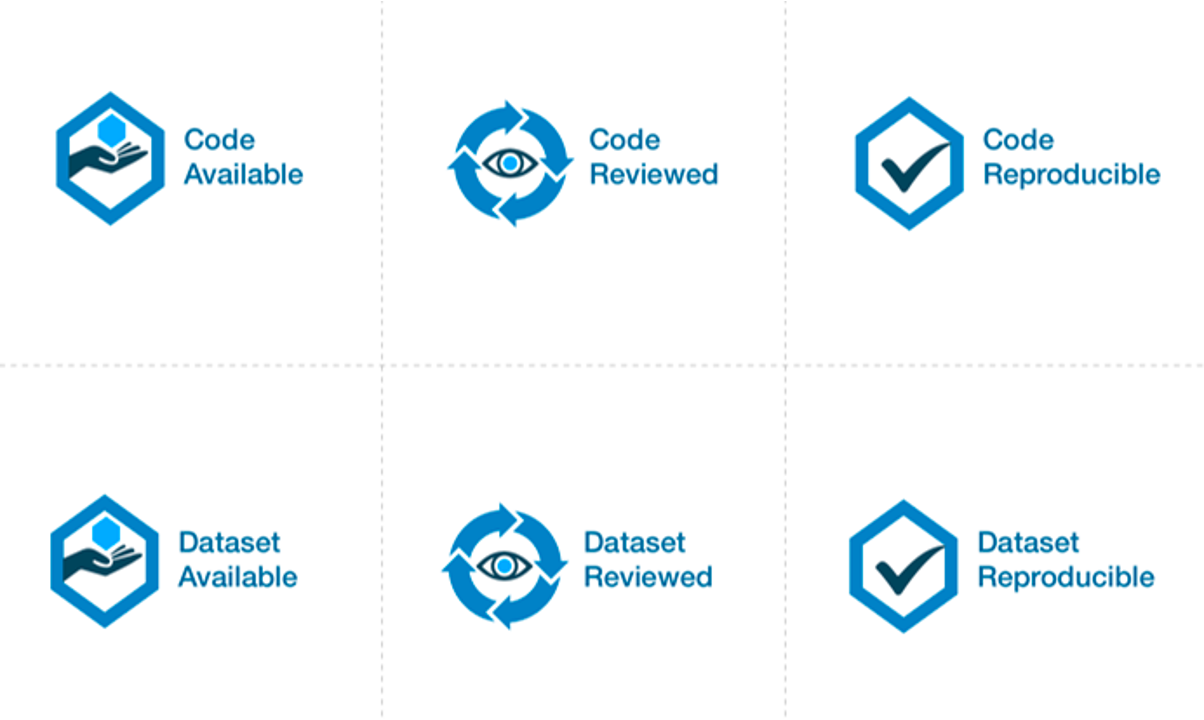

Badges

The availability of artifacts accompanying the papers will be denoted by badges. Badges will appear on the page of the paper in the digital library. Since DSN is an IEEE-sponsored conference, it follows the scheme of the IEEE Xplore digital library (see https://ieeexplore.ieee.org/Xplorehelp/overview-of-ieee-xplore/about-content#reproducibility-badges). Accordingly, DSN will award the following three types of badges:

- Available: The code and/or datasets, including any associated data and documentation, provided by the authors is reasonable and complete and can potentially be used to support reproducibility of the published results.

- Reviewed: The code and/or datasets, including any associated data and documentation, provided by the authors is reasonable and complete, runs to produce the outputs described, and can support reproducibility of the published results.

- Reproducible: This badge signals that an additional step was taken or facilitated to certify that an independent party has regenerated computational results using the author-created research objects, methods, code, and conditions of analysis. Reproducible assumes that the research objects were also reviewed.

The “Reviewed” badge implies that the artifact also qualifies for the “Available” badge. The “Reproducible” badge subsumes both the “Reviewed” and “Available” badges. Authors can apply for all three types (i.e., the artifact is Available, Reviewed, and Reproducible).

Artifacts can be Code or Datasets. The same research paper can be accompanied by both Code and Datasets.

IEEE Xplore also allows a fourth type of badge (“Replicated”). This fourth badge is only for replication studies performed by other authors, and will not be awarded as part of this artifact evaluation process.

Artifact Submissions

When submitting the research paper, authors must indicate whether they intend to submit an artifact for it. At a later deadline (see “Important Dates” below), authors can separately submit the actual artifact through a dedicated form on HotCRP.

The link for submitting artifacts will be provided here soon.

Artifacts must be submitted through either Zenodo (https://zenodo.org/) or Figshare (https://figshare.com/). These are two popular open-access repositories, widely adopted by computer science conferences, that guarantee long-term archival storage. They also assign a DOI, i.e., a fixed, persistent identifier for the artifact that provides a more stable link than a plain URL. Artifacts should not be submitted through GitHub or other software development platforms. You are free to also share a copy of your artifact on these platforms, but the artifact must be submitted and shared through Zenodo or Figshare for long-term archival storage and better interoperability.

Note that information about the artifact submission and its review will not be shared with the PC of the research track.

Conflicts of Interest

Authors and members of the Artifact Evaluation Committee (AEC) are asked to declare potential conflicts during the paper submission and reviewing process. In particular, a conflict of interest must be declared with: (1) anyone who shares an institutional affiliation with an author at the time of submission, (2) anyone with whom an author has collaborated or published within the last two years, (3) anyone who was the advisor or advisee of an author, or (4) anyone who is a relative or close personal friend of an author. For other forms of conflict and related questions, authors must explain the perceived conflict to the track chairs.

AEC members who have conflicts of interest with a paper, including program co-chairs, will be excluded from any discussion concerning the paper.

Guidelines for submitting/reviewing artifacts

As guidance for submitting your artifact, please consider the following points. The Artifact Evaluation Committee will also consider these points to assign badges.

For the "Available" badges:

- Is the artifact publicly available through an open-access repository (Zenodo or Figshare)?

- Is the artifact consistent and complete with respect to the paper?

- Does it provide sufficient user documentation (e.g., command-line syntax)?

- Can it potentially be used to support reproducibility of the paper (even if you could not run the artifact)?

- Does it include a license that allows researchers to reuse and extend the artifact (e.g., for comparison purposes in a future paper)? Creative Commons licenses are a typical choice for open data.

For the "Reviewed" badges:

- Does the artifact include enough documentation about configuration and installation (e.g., on external dependencies, supported environments)?

- Does it include instructions for a "minimum working example", and could you run it?

- Does the artifact include documentation about its internals (e.g., organization of modules and folders, code comments for explaining non-obvious code) that is understandable for other researchers?

For the "Reproducible" badges:

- Does the documentation of the artifact explain which claims of the paper it can reproduce, and how to reproduce them?

- Can you run the analysis automatically, with a reasonable effort and time/resource requirements?

- Do the results of the execution support the claims of the paper?

Additional suggestions:

- You are allowed to provide your artifact as a virtual machine. Even in that case, you should still provide source code and scripts that were used to build the virtual machine.

- Please minimize the number of dependencies and the amount of hardware resources needed to run the artifact.

- Please provide clear step-by-step instructions to install and run the artifacts. Remember to test them in a clean environment!

- When providing instructions to users and reviewers, please state the expected outputs (or any other side effects) of each instruction, and the estimated human and compute time it requires.
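As an illustration of the last two suggestions, here is a minimal sketch of a smoke-test script that reviewers could run first. It assumes a Python artifact; `minimal_example`, `EXPECTED`, and `smoke_test` are hypothetical names, not something the AEC requires. The script compares the actual output with the expected output documented by the authors and reports the elapsed compute time.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical expected outputs, as they would be documented in the artifact's README.
EXPECTED: Dict[str, int] = {"items": 10, "sum": 55}


def minimal_example() -> Dict[str, int]:
    """Hypothetical stand-in for the artifact's smallest end-to-end run."""
    data = list(range(1, 11))
    return {"items": len(data), "sum": sum(data)}


def smoke_test(run: Callable[[], Dict[str, int]],
               expected: Dict[str, int]) -> Tuple[bool, float]:
    """Run the example, compare each documented value, and time the run."""
    start = time.perf_counter()
    actual = run()
    elapsed = time.perf_counter() - start
    for key, value in expected.items():
        status = "OK" if actual.get(key) == value else "MISMATCH"
        print(f"{key}: expected {value}, got {actual.get(key)} [{status}]")
    print(f"Elapsed: {elapsed:.2f} s")
    return actual == expected, elapsed


if __name__ == "__main__":
    ok, _ = smoke_test(minimal_example, EXPECTED)
    # A non-zero exit code makes a failed check easy to spot in scripts.
    raise SystemExit(0 if ok else 1)
```

Printing both the expected and the actual value lets reviewers see at a glance where a mismatch occurs, rather than only learning that the run failed.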

See also:

- Artifact Evaluation Guidelines for DSN AEC

- Artifact Evaluation: Tips for Authors – Primordial Loop (padhye.org)

- HOWTO for AEC Submitters - Google Docs

Artifact Evaluation Process

The artifacts will be evaluated by a dedicated Artifact Evaluation (AE) committee through a single-blind review process. Authors should be available to respond quickly during the artifact evaluation.

The artifact evaluation process is restricted to accepted papers in the research track of DSN (including PER and Tool papers). The evaluation will begin after the review process is complete and acceptance decisions have been made by the research track PC. The research PC chairs will make the submitted paper available to the Artifact Evaluation committee. The information about the artifact evaluation is NOT shared with the research PC in any form.

Evaluation starts with a “kick-the-tires” period, during which evaluators ensure they can access their assigned artifacts and perform basic operations such as building and running a minimal working example. Evaluators give feedback on the artifact, which authors can use to address any significant issues that block the evaluation. After the kick-the-tires stage ends, communication is limited to the interpretation of the produced results or to minor syntactic issues in the submitted materials.

We recommend that authors present and document their artifacts so that the evaluation committee can use them and complete the evaluation successfully with minimal (and ideally no) interaction. To ensure that your instructions are complete, we suggest running through them on a fresh setup prior to submission, following exactly the instructions you have provided.

We expect that most evaluations can be done on any moderately recent desktop or laptop computer. In other cases, and to the extent possible, authors should arrange for their artifacts to run on community research testbeds, or provide remote access to their systems (e.g., via SSH) with proper anonymization. If the artifact targets full reproducibility of results that take a long time to obtain (e.g., because of a large number of experiments, as in fault injection), authors should provide a shortcut or sampling mechanism.
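A minimal sketch of such a sampling mechanism, assuming a Python driver script for a fault-injection campaign. The names `list_experiments`, `select_experiments`, `run_experiment`, and the `--sample`/`--seed` options are hypothetical placeholders; the idea is that a fixed seed lets reviewers rerun the same reduced subset instead of the full campaign.

```python
import argparse
import random
from typing import List, Optional


def list_experiments() -> List[str]:
    """Hypothetical placeholder: enumerate the full campaign (one entry per experiment)."""
    return [f"fault_{i:04d}" for i in range(1000)]


def select_experiments(experiments: List[str], sample: Optional[int], seed: int) -> List[str]:
    """Return the full list, or a reproducible random subset of `sample` experiments."""
    if sample is None or sample >= len(experiments):
        return list(experiments)
    rng = random.Random(seed)  # fixed seed, so every reviewer gets the same subset
    return sorted(rng.sample(experiments, sample))


def run_experiment(name: str) -> str:
    """Hypothetical placeholder for running one experiment and returning its outcome."""
    return f"{name}: done"


def main(argv: Optional[List[str]] = None) -> List[str]:
    parser = argparse.ArgumentParser(description="Run the experiment campaign (full or sampled).")
    parser.add_argument("--sample", type=int, default=None,
                        help="run only N randomly chosen experiments instead of the full campaign")
    parser.add_argument("--seed", type=int, default=42,
                        help="seed for the random sample")
    args = parser.parse_args(argv)

    selected = select_experiments(list_experiments(), args.sample, args.seed)
    print(f"Running {len(selected)} experiment(s)")
    return [run_experiment(name) for name in selected]


if __name__ == "__main__":
    main()
```

For example, if the script were saved as `run_experiments.py` (a hypothetical name), `python run_experiments.py --sample 50` would run 50 randomly chosen experiments, while omitting `--sample` would run the full campaign. The documentation should then state which claims the sampled run supports and how its results are expected to differ from the full run.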

Distinguished Artifact Award

All submitted artifacts will compete for a “Distinguished Artifact Award” to be decided by the committee. The award will go to the artifact that (1) has the highest degree of reproducibility as well as ease of use and documentation, (2) allows other researchers to easily build upon the artifact’s functionality for their own research, and (3) substantially supports the claims of the paper. We anticipate that at most one artifact (paper) will receive the award, and the committee reserves the right not to award any artifact in a given year if none meets the criteria for the award.

Important dates

The following list reports the important dates for the Artifact Evaluation (AE), along with the main dates of the DSN research track.

- October 2025: AE committee opens for nominations

- December 4, 2025: Research paper submissions

- January 2026: AE committee finalized

- March 19, 2026: Research Track author notification

- March 26, 2026: Artifact submission deadline

- Beginning of April 2026: end of the 1st AE phase (“kick-the-tires”)

- April 28, 2026: AE finalized, distinguished artifact award notified

All dates are AoE (Anywhere on Earth).

Volunteering for the Artifact Evaluation Committee

We welcome (self-)nominations to join the Artifact Evaluation Committee. If you are interested, please fill out the form at: https://forms.gle/uFT2iJEfoqEQ6uwGA.

AEC members will contribute to the conference by reviewing companion artifacts of papers accepted in the research track. We invite early-career researchers to join the AEC, including PhD students (e.g., in their second or later years of study), who are working in any topic area covered by DSN. See the Research Track Call for Contributions (https://dsn2026.github.io/cfpapers.html) for details. Participating in the AEC will allow you to familiarize yourself with research papers accepted for publication at DSN 2026 and to support experimental reproducibility and open-science practices.

For a given artifact, you will be asked to evaluate its public availability, functionality, and/or ability to reproduce the results from the paper. You will be able to discuss with other AEC members and anonymously interact with the authors as necessary, for instance, if you are unable to get the artifact to work as expected. Finally, you will provide a review for the artifact to give constructive feedback to its authors, discuss the artifact with fellow reviewers, and help award the paper artifact evaluation badges. You can be located anywhere in the world, as all committee discussions will happen online.

We expect each member to evaluate 1-2 artifacts, and each evaluation is expected to take approximately 4 hours. AEC members are expected to allocate time to bid for artifacts they wish to review, read the respective papers, evaluate and review the corresponding artifacts, and be available for online discussion (if needed). Please ensure that you have sufficient time and availability (see also the indicative important dates). Please also ensure that you can carry out the evaluation confidentially and independently, without sharing artifacts or related information with others, and keeping all discussions within the AEC.

Artifact Track Co-Chairs:

- Xugui Zhou, Louisiana State University, USA

- João R. Campos, University of Coimbra, Portugal

- Roberto Natella, Gran Sasso Science Institute (GSSI), Italy

Contact

For further information or any issues, please send an email to artifacts@dsn.org.